America’s Push for AI Regulation and Its Global Ripple Effect

Artificial Intelligence (AI) is no longer the technology of tomorrow; it is already transforming the way we live, work, and communicate today.

CURRENT AFFAIRS

7/31/2025 · 4 min read

From personalized healthcare and autonomous vehicles to content generation and predictive analytics, AI is touching nearly every sector. But as its power and presence grow, so do concerns around ethics, bias, misuse, and lack of transparency.

In response, the United States is taking concrete steps to introduce guardrails around AI technologies. This push for regulation is already influencing global standards and triggering a broader movement toward responsible AI development.

Why the U.S. Is Moving Toward AI Regulation

The rapid rise of generative AI tools such as ChatGPT, DALL·E, and Google Gemini has raised pressing concerns about misinformation, intellectual property infringement, algorithmic discrimination, and job displacement. AI-generated deepfakes, for instance, can convincingly imitate a real person's voice and appearance, which could be used to mislead voters, undermine media integrity, and erode public trust in what people see and hear.

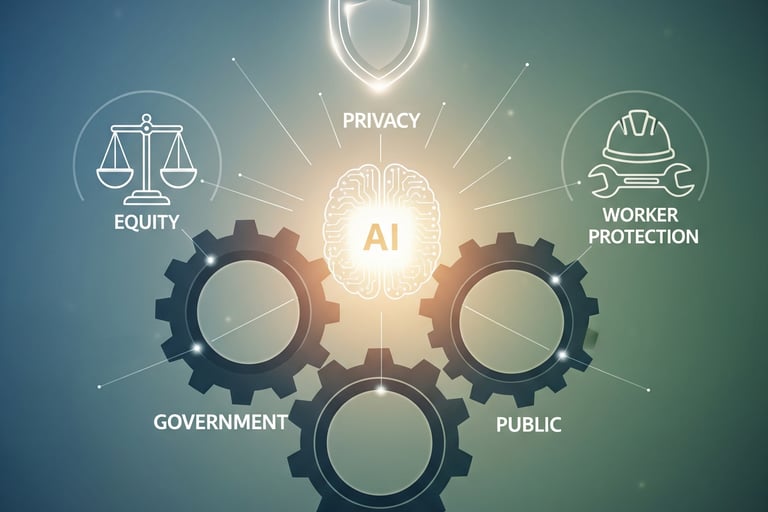

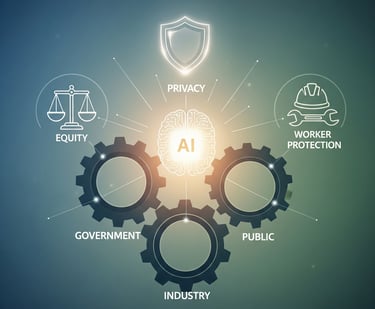

To address these challenges, the Biden administration issued Executive Order 14110 on Safe, Secure, and Trustworthy Artificial Intelligence in October 2023. The order lays out a roadmap for federal AI governance. Key priorities include:

Ensuring AI systems are tested for safety, robustness, and reliability.

Requiring developers of the most powerful AI models to share safety test results with the federal government.

Guarding against algorithmic discrimination.

Strengthening privacy protections for users.

Promoting equity, civil rights, and worker protection.

Encouraging public-private collaboration and international partnerships.

Federal agencies such as the National Institute of Standards and Technology (NIST) and the Office of Science and Technology Policy (OSTP) were charged with turning the order into technical standards and best practices for trustworthy AI. The order itself was rescinded in January 2025.

The Global Ripple Effect

1. A New Era of Global Tech Governance

America’s regulatory momentum has not gone unnoticed. Countries around the world are closely watching the U.S. approach as they craft their own policies. The European Union’s AI Act, which classifies AI systems by risk level and sets strict compliance requirements for high-risk uses, had already set the pace. U.S. engagement makes coherent international frameworks more likely.

Nations like Canada, India, Japan, and the United Kingdom are now actively revising their AI strategies to keep pace with U.S. policy developments and global expectations.

2. Tech Giants Adjusting to Stricter Oversight

Leading AI developers such as OpenAI, Meta, Microsoft, and Google are adapting their products and policies to align with emerging rules and with the voluntary safety commitments they made to the White House in 2023.

This includes:

Enhancing model transparency and explainability.

Offering opt-out options for data collection.

Implementing content labeling for AI-generated outputs.

Increasing safeguards against bias and harmful use.

As a result, developers elsewhere face pressure to meet similar standards if they want access to the lucrative U.S. market, which helps turn American practices into de facto global defaults.

3. AI at the Heart of Global Diplomacy

AI is now a strategic priority in international relations. Forums such as the G7, the G20, and the OECD are placing AI governance at the top of their agendas.

Through diplomatic engagement, the U.S. is working to:

Define shared principles for AI safety and transparency.

Prevent AI from being used for surveillance or oppression.

Foster innovation without exploitation, especially in vulnerable societies.

Develop cross-border data sharing agreements with privacy safeguards.

These efforts serve national interests while also helping to define ethical boundaries for how AI is used worldwide.

4. Elevating Global Standards for Ethical AI

America’s emphasis on responsible AI development is raising the global bar. Nations without existing regulatory frameworks are using the U.S. and EU guidelines as blueprints. There’s a growing consensus on the need for:

Bias audits to prevent discrimination in AI outcomes.

Model documentation, such as model cards and datasheets for datasets.

Human-in-the-loop systems for decision-making in sensitive areas such as hiring, finance, and law enforcement.

Support for open-source and inclusive AI development to prevent monopolization.

Domestic and International Challenges

While the momentum is encouraging, several challenges remain:

Fragmented U.S. landscape: With no federal AI law yet in place, states like California and New York are creating their own rules, leading to a patchwork of compliance requirements.

Balancing innovation and regulation: There are fears that excessive regulation could slow down innovation or push tech development offshore.

Lagging international enforcement: While agreements are being discussed, there is still no central global body to enforce AI standards or resolve cross-border violations.

Looking Ahead: A Leadership Opportunity

The U.S. has a pivotal opportunity to lead by example. By advancing policies that foster safe, fair, and inclusive AI innovation, it can help create a world where technology enhances lives without compromising rights or democracy.

For this to succeed, regulatory efforts must:

Be collaborative, involving tech companies, civil society, academia, and the public.

Stay adaptive as technologies evolve.

Remain global-minded, ensuring alignment with international partners.

The decisions made today will shape how AI is developed and deployed tomorrow. If done right, America’s leadership in AI regulation could define not just the next wave of innovation but also the future of trust in technology.